Collaboration

Back in high school, I built robots with a group of exactly the sort of teenagers you’d expect to find in a robotics club. I was physically weaker than most of my peers, so I had to use tools and leverage to complete routine tasks that others could do with their bare hands. This gave me more practice with those tools than others were getting. When the tasks scaled up in difficulty – a stuck bolt turning out to be very stuck, a thicker sheet of metal turning out to be unusually reluctant to bend – my tool use tended to scale up quickly thanks to my constant practice with it, whereas peers who’d been brute forcing the task before would face the initial learning curve of the tool at that moment.

I think I’m doing a similar thing with collaboration. I have to try really hard to keep in touch with people because my default state includes mostly avoiding everyone. But by trying on purpose, I seem to have accidentally developed systems which scale better than the innate strengths which many take for granted in themselves.

And I’m writing about trying on purpose, weird though it feels, because several people have recently remarked on the quality of my collaboration. What they perceive as collaborativeness, I experience as a desire to work with my friends, and a recognition that the most effective way to do this is to make friends among my colleagues.

Assorted Git & Unix Tips

In my present role, I get to work with colleagues of delightfully diverse skill sets. Sometimes I’m learning directly from experts, and other times I get to learn by teaching in areas where I happen to have the local maximum of experience. One delightful colleague has a coding bootcamp background which rapidly exposed them to a lot of important topics, and I find myself helping to fill in the gaps where a couple hours of instruction don’t quite get the point across like years of practice.

I’m writing this to capture, in no particular order, some advice that I’m noticing myself giving consistently. I can only guess at how I accumulated it, and I cannot promise that there won’t be exceptions to any of these “rules”. But I suspect someday in the future I’ll be interested in looking back on notes like this, so onto the blog they go!

DIY Shoe Chains

The Pacific Northwest is dangerously frozen at the moment. Other regions handle conditions like this just fine. But a freeze like this is especially dangerous to us because most people are unprepared for it.

Right now we’ve got a bunch of frozen sleet on the ground that looks like snow, but offers the traction of an ice skating rink. This morning I thought I could cross the “snow” in regular shoes, and fell (embarrassingly, but non-injuriously) immediately. I was able to go inside and put on my shoe chains because I keep a pair on hand for occasions like this, and with chains the ice was as easy to walk on as snow would be. But it got me thinking about people who might not have thought ahead to own shoe chains. You can’t just order a pair for use today; nobody’s delivering anything in this weather.

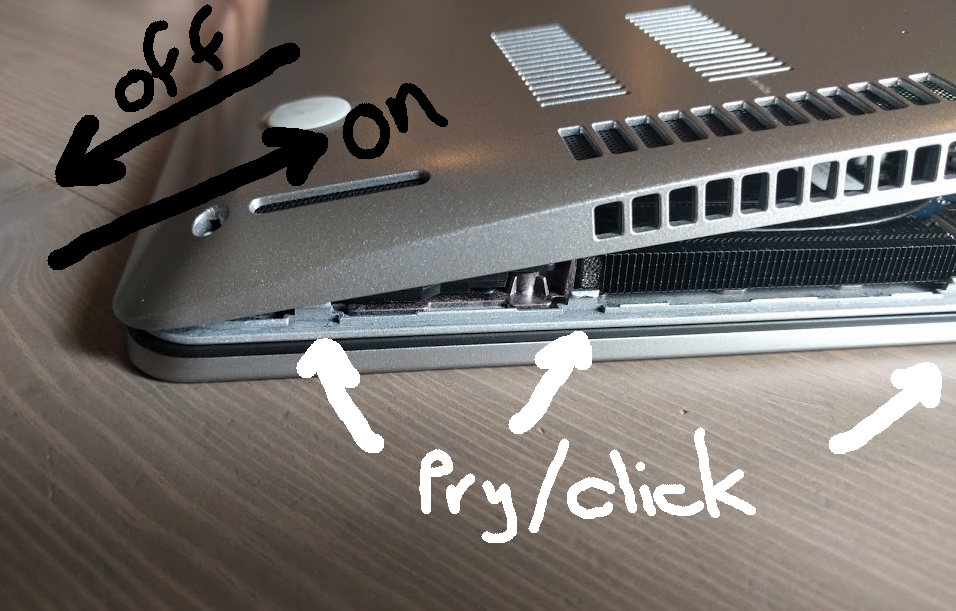

So I did a quick experiment to see whether adequate shoe chains could be assembled out of stuff that a normal person might have on hand. All the commercial ones really are, after all, is a piece of something stretchy and some chain. Image below the fold.

Making Dice

If you’re here for pretty pictures of dice, prepare to be disappointed. Making adequate dice is easy, but taking good photos of them exceeds my current skills.

In today’s standard “how I spent my winter vacation” small talk, I showed some colleagues a dice set that I made last week, and they seemed surprised when I explained that it was relatively easy.

Making excellent or perfect dice is not easy, but I’m not trying for excellent or perfect. I’m trying for “nice to look at” and “capable of showing random-feeling numbers when I roll them”. Those goals are easy to achieve with cheap products from the internet.

If you want to learn to make good dice, go watch Rybonator on YouTube, and the algorithm will start suggesting other good channels as well.

Next I’m going to share some Amazon links, and a note of the approximate price at time of writing. They aren’t affiliate links because signing up for the affiliate program requires more paperwork than I feel like doing right now. But they are the specific items I’ve been using to get pretty-okay dice out of.

The basic materials you’ll need to make dice are:

- A dice set mold (~$8). Good molds are a solid slab of material. This is not a good mold, but it does make dice.

- Epoxy resin. I got the 16oz of this set (~$9) because it was the cheapest option that seemed adequate, and it was cheap, and it was adequate. When warmed in boiling water before mixing, it has very few bubbles, and it cures overnight if left in a warm place.

- Clean disposable cups and popsicle sticks or equivalent stirrers. Grab these from your recycling or dollar store. ($0-$2)

- Waterproof gloves that you don’t mind getting resin and paint on, if you don’t want high-tech chemicals on your skin.

A dice mold and resin are enough to get you some transparent dice, but that’s boring. You can include household objects like game pieces or beads in the dice, and you can buy additives specifically designed for resin casting:

- Mica powder like this colorful set (~$7) or this metallic set (~$10) gives a shiny metallic or pearlescent look

- Dye like this set (~$10) gives the resin an even, transparent color.

- Glitter like this assortment (~$11) can sparkle like tiny stars, contrast pleasantly with dyed resin, or just display interesting behaviors where the larger pieces sink if the resin is too warm and the smaller pieces remain in suspension.

Plan what you want to include in a given dice set before starting to mix the resin. I find that 40ml (20ml each of resin and hardener) is just right for a single pour of the mold linked above. Then it’s just a matter of following the directions for the resin. After mixing, you can split the resin into several different disposable cups if you’d like to pour different colors together.

Once the dice are hard, unmold them and be amazed! If you want the numbers to be visible, consider flooding them with whatever paint you have on hand, then wiping off the excess with a paper towel. The molds emboss the numbers into the dice, so the paint in the numbers will be all that remains after you wipe them.

If the dice come out too rough, zona papers (~$12) are popular for sanding to a glass-like finish.

If the dice have huge bubbles, you can fix them with UV resin (~$10) and an ultraviolet bulb. The trick to the UV stuff is making sure that the wavelength required by the resin (405-410nm) is included in the spectrum emitted by the lamp (385-410nm). You may already have a UV lamp around if you do gel nails or resin printing.

I find that bubbles often show up in the corners of dice, and sticking some clear tape to the sides I’m mending with UV resin helps keep it where it belongs while letting the light in to harden it. I’ve also gotten some fun effects by painting the inside of the bubble a contrasting color before filling it with UV resin. After repairing bubbles with UV resin, the affected sides often need to be flattened out with a file or coarse sandpaper before polishing with fine sandpaper or zona papers.

In making several sets of increasingly less-bad dice, I’ve noticed that some techniques seem to yield better outcomes:

- Start with the resin really hot. I set both parts of the epoxy in a container of almost boiling water before use, then dry them off and measure them out immediately. Hot resin flows better.

- When using large glitter, make sure to get some glitter-free resin into the very bottom of each die first, or stir it. Big inclusions have a nasty habit of blocking the resin from getting into the tip of the D4.

- Place the mold on a plate or tray before pouring, and do not remove it from the tray until the dice are hard. Bending the mold at all changes the volume of the cavities, which presses resin out then sucks air in.

- Smear some resin on the lid before capping the mold, and slowly roll the lid onto the mold. Setting the lid straight down allows air to be trapped under it in the middle.

- Over-fill the mold cavities slightly.

- Expect heavier inclusions, like large glitter, to sink to the bottom when the resin is poured hot. The pros often wait for the resin to get tacky before pouring part of a die, but that’s advanced technique and I have yet to try much of it.

- Paint can be easily removed from the dice numbers with an ultrasonic cleaner. Don’t try to clean the dice this way if you want the paint to stay in!

This barely scratches the surface of dice-making, and there are better resources on every topic for becoming an expert, making custom molds, and other advanced topics. Although it’s very hard to make excellent dice, it’s shockingly cheap and easy to make mediocre dice, and mediocre dice are often more than adequate to have fun with.

Retroreflectors & Storing Ground Glass

Retroreflectors are fun. I finally got around to picking up some cheap glass blast media today (mine’s the 40/70 grit recycled bottle glass from Harbor Freight / Central Pneumatic) and did some testing with various paints and glues that I had lying around. I’m using it as retroreflective beads. When I hear “beads” I think of things with holes in them for putting on a string, but in this case it means more like beads of condensation – tiny round blobs. They feel gritty like beach sand, and being made from clear glass, they look like unusually sparkly white sand as well.

DIY Thimble

Over the past couple weeks, my schedule has had a higher than usual concentration of the kind of meetings where one sits off-camera and listens to a presenter talk. Like many engineers who knit in meetings, I find that keeping my hands busy helps me focus. Knitting puts me on the losing side of a battle between “don’t drop any stitches” and the laws of physics, however, so instead I’ve been hand sewing quite a bit.

Lumenator

About a year ago, I found out about lumenators. The theory is that if you put sunshine-ish amounts of light onto a creature, the creature reacts as it would in sunlight.

So, I built one. It’s technically brighter than the sun.

Playing Dress-Up With Starlink

Some friends showed me a post investigating whether Starlink dishes still work when decorated in various ways. They asked whether I was able to reproduce the results. So I pulled the dish down off my roof and tested it with a few things that I had lying around the house and yard.

Methodology Notes:

I gathered data in my camera roll: a snapshot of the setup, followed by a screencap of the “router <-> internet” pane of the speedtest within the Starlink Android app. I left the dish set up in the same spot, which was at ground level but reported no obstructions when I did the sky scan thing in the app. I power cycled the dish once during the experiment, roughly halfway through the control tests for the “is it slower?” question, because I needed to plug something else into the extension cord that it was using for a minute.

I’m located south of the 45th parallel.

Will It Send?

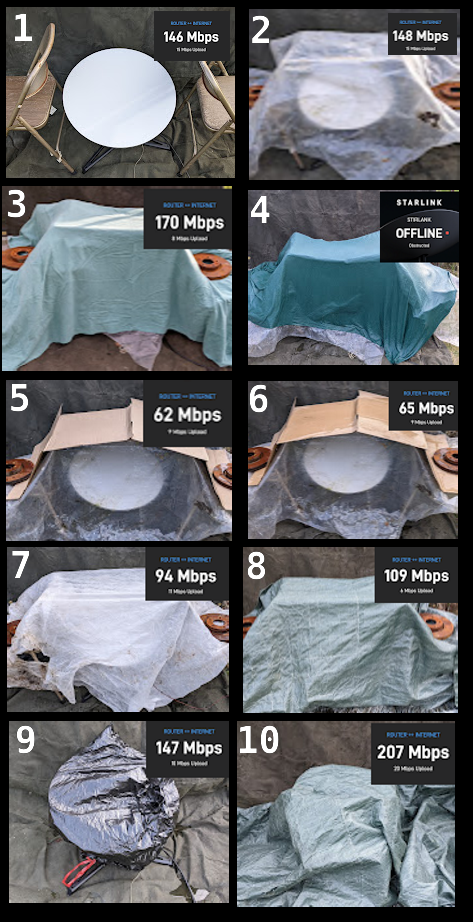

For my first batch of tests, I was curious whether I could get any data in and out via Starlink with various configurations of stuff on and around the dish. I only ran one speedtest each for these.

I started by suspending things over Starlink, because I knew some of the materials would get heavy (such as wet cotton cloth) and I was scared of hurting the little motors inside that it uses to move itself around. I draped a canvas tarp over the north side of a fence to make an impromptu photo studio for the dish. The dish had an unobstructed view of the sky upward and to the north. I placed metal folding chairs to the east and west of the dish, with their backs closest to the dish, to hold up the various covers. I used some old brake pads to weigh down the things that I draped over the chairs so they wouldn’t blow away.

1. No cover, control to make sure Starlink works in this position. It sends. 146 Mbps down, 15 Mbps up.

2. Covered with clear-ish greenhouse plastic. It sends. 148 Mbps down, 15 Mbps up.

3. Same as (2) but with a cotton bedspread over the plastic. It sends. 170 Mbps down, 8 Mbps up.

4. Same as (3) but with the bedspread soaking wet. It does not send. The app reports “offline, obstructed”.

5. Plastic from (2) plus a single layer of corrugated cardboard box. It sends. 62 Mbps down, 9 Mbps up.

6. Same as (5) but I dumped some water on the box. It mostly ran off. It sends. 65 Mbps down, 9 Mbps up.

7. Double layer of row cover, like you put on plants in the garden to keep the frost from hurting them. It sends. 94 Mbps down, 11 Mbps up.

8. Single layer of ratty old woven plastic tarp. It sends. 109 Mbps down, 6 Mbps up.

For the final 2 tests, I got a bit braver about putting things directly on the dish, instead of just suspending them above it.

9. Dish in a black plastic trash bag like you’re throwing it out. It sends. 147 Mbps down, 10 Mbps up.

10. Same tarp as from (8), but directly on the dish instead of hanging above it. It sends. 207 Mbps down, 20 Mbps up.

Is It Slower?

As you’ll notice in the numbers that I was getting in the above trials, sometimes covering the dish yields a faster single speed test than having it exposed. That seems wrong. I suspect that this is normal variance based on what satellites are available, but I don’t actually know what behavior is normal. So I did a few more tests in configuration 10, and a bunch of tests in configuration 1, to make sure we’re not in a timeline where obstructing a radio link somehow makes it perform better.

Configuration 10 (tarp directly on the dish):

| Mbps Down | Mbps Up |
|---|---|
| 207 | 20 |
| 173 | 5 |
| 138 | 11 |
| 124 | 13 |
| 180 | 19 |
| 101 | 9 |

Configuration 1 (no cover):

| Mbps Down | Mbps Up |
|---|---|
| 104 | 24 |
| 114 | 4 |
| 119 | 10 |
| 100 | 16 |
| 111 | 14 |
| 147 | 5 |
| 147 | 5 |
| 97 | 15 |
| 218 | 15 |
| 87 | 15 |
| 176 | 16 |
| 192 | 6 |
| 125 | 6 |
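To sanity-check the eyeball comparison, the two tables can be averaged. This is a rough sketch, not part of the original experiment: it assumes the first table holds the configuration 10 runs and the second holds the configuration 1 runs, and `mean_col` is a helper name made up for the example.

```shell
# Hypothetical helper: mean of one whitespace-separated column read from stdin.
mean_col() {
    awk -v col="$1" '{ sum += $col; n++ } END { if (n) printf "%.1f\n", sum / n }'
}

# Rows copied from the tables above as "down up", in Mbps.
# Assumption: first table = configuration 10 (tarp on the dish).
covered="207 20
173 5
138 11
124 13
180 19
101 9"

# Assumption: second table = configuration 1 (no cover).
control="104 24
114 4
119 10
100 16
111 14
147 5
147 5
97 15
218 15
87 15
176 16
192 6
125 6"

printf 'covered: %s down, %s up\n' \
    "$(printf '%s\n' "$covered" | mean_col 1)" \
    "$(printf '%s\n' "$covered" | mean_col 2)"
printf 'control: %s down, %s up\n' \
    "$(printf '%s\n' "$control" | mean_col 1)" \
    "$(printf '%s\n' "$control" | mean_col 2)"
```

With these rows the covered dish averages roughly 154 Mbps down against roughly 134 Mbps for the bare dish. At sample sizes this small, that says more about run-to-run variance than about tarps.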

I left it all out overnight, and the canvas tarp that I’d been using as a visual backdrop blew off of the fence so it was covering the dish. I made a video call on the connection with the canvas over the dish and didn’t notice any subjective degradation of service compared to what I’m accustomed to getting from it.

Conclusions

Starlink definitely still works when I put dry textiles between the dish and the satellite. It doesn’t seem to matter if the textiles are directly on the dish or suspended a couple feet above it.

I’m surprised that Eric Kuhnke’s Starlink worked with 2 layers of wet bedsheet over it. The only case in which I managed to cause my Starlink to report itself as being obstructed was when I had a wet bedspread suspended in the air over it. I don’t really want to start piling wet cloth directly on the dish, though, because wet cloth is heavy and I’m scared of overloading the actuators.

Starlink gets kind of warm during normal operation, as demonstrated by the cats. I wouldn’t want to leave mine in a dark colored trash bag or under a dark colored tarp on a sunny day in case it overheated. And there’s no need to – if you don’t want people to know you’re using one, you can just suspend an opaque and waterproof tarp above it and there’ll be better airflow around the dish itself and thus less risk of overheating.

Have fun!

tree-style tab setup

How to get rid of the top bar in Firefox after installing Tree Style Tab:

In about:config (accept the risk), search toolkit.legacyUserProfileCustomizations.stylesheets and hit the funny looking button to toggle it to true.

In about:support, above the fold in the “Application Basics” section, find Profile Directory.

In that directory, mkdir chrome, then create userChrome.css, containing:

#main-window[tabsintitlebar="true"]:not([extradragspace="true"]) #TabsToolbar

{

opacity: 0;

pointer-events: none;

}

#main-window:not([tabsintitlebar="true"]) #TabsToolbar {

visibility: collapse !important;

}
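The file-creation step can also be scripted. A minimal sketch, assuming a POSIX shell: `install_userchrome` is a made-up helper name, and the path you pass it must be the Profile Directory that about:support shows. Note that it overwrites any userChrome.css already in that profile.

```shell
# Hypothetical helper: write the userChrome.css shown above into a profile.
# $1 = the Profile Directory from about:support (not guessed here).
install_userchrome() {
    profile="$1"
    mkdir -p "$profile/chrome"
    # Quoted heredoc delimiter keeps the CSS literal (no shell expansion).
    cat > "$profile/chrome/userChrome.css" <<'EOF'
#main-window[tabsintitlebar="true"]:not([extradragspace="true"]) #TabsToolbar {
  opacity: 0;
  pointer-events: none;
}
#main-window:not([tabsintitlebar="true"]) #TabsToolbar {
  visibility: collapse !important;
}
EOF
}
```

Call it as `install_userchrome /path/to/your/profile`, then restart Firefox so the stylesheet gets picked up.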

top/htop in windows is ctrl+shift+esc

Helping a neighbor with a Windows update issue today, I explained that asking a Linux admin to use Windows is like asking a Latin speaker to translate a document from Spanish. Most of the concepts are similar enough that they’ll be more helpful than a monolingual English speaker, but good guessing is not the same as fluency.

Since it was a problem with good directions on how to fix it, the process mostly went smoothly. As I complained to a Windows admin friend afterwards, it was fine except all the slashes in paths were backwards, and I couldn’t find the command prompt equivalent to Linux’s top or htop.

My Windows friend pointed out the obvious solution to me: The Windows equivalent to top is not a command at all, but rather the task manager GUI. Next time I need top or htop in Windows, I’ll try to remember to hit ctrl+shift+esc to summon that interface instead.

And next time I’m searching the web for “windows command prompt top”, “windows equivalent of top command”, and other queries that assume it’ll let me live in the terminal like my preferred operating systems, I might just end up back on this very post. Hi, future me!

transcription with mplayer and i3

I recently wanted to manually transcribe an audio recording. I prefer to type into LibreOffice Writer for this purpose. Writer has an audio player plugin for transcription, but unfortunately its keyboard shortcuts didn’t work when I tried it.

I just want to play some audio in one workspace and have play/pause and 5-second rewind shortcuts work even when another window is focused.

Since I am using i3wm on Ubuntu, I can glue up a serviceable transcription setup from stuff that’s already lying around.

The first challenge is to persuade an audio player to accept commands while it’s not the window in focus. By complaining about this problem to someone more knowledgeable than myself, I learned about mplayer’s slave mode. From its docs, I learned that I can instruct mplayer to take arbitrary commands on a fifo as follows:

$ mkfifo /tmp/mplayerfifo

$ mplayer -slave -input file=/tmp/mplayerfifo audio-to-transcribe.mp3

Now I can test whether mplayer is listening on the fifo. And indeed, the audio pauses when I tell it:

$ echo pause > /tmp/mplayerfifo

At this time I also test the incantation to rewind the audio by 5 seconds:

$ echo seek -5 > /tmp/mplayerfifo

Since both commands work as expected, I can now create keyboard shortcuts for them in .i3/config:

bindsym $mod+space exec "echo pause > /tmp/mplayerfifo"

bindsym $mod+z exec "echo seek -5 > /tmp/mplayerfifo"

After writing the config, $mod+shift+c reloads it so i3 knows about the new shortcuts.

Finally, I’ll make sure this keeps working after I reboot. I’ll make an alias in my ~/.bashrc to save having to remember the mplayer incantation:

$ echo "alias transcribe='mplayer -slave -input file=/tmp/mplayerfifo'" >> ~/.bashrc

And to automatically create the fifo once on boot:

$ echo "mkfifo /tmp/mplayerfifo" >> ~/.profile

Now after I source ~/.bashrc, I can play media with this transcribe alias, and the keyboard shortcuts control it from anywhere in my window manager.
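One wrinkle with a bare mkfifo is that it fails with an error if the fifo already exists. A small sketch of a wrapper that only creates the fifo when it is missing, assuming bash or another POSIX shell: `ensure_fifo` and `transcribe_play` are names invented for this example, not anything mplayer or i3 provides.

```shell
# Same fifo path as the i3 bindings above; override by exporting FIFO.
FIFO="${FIFO:-/tmp/mplayerfifo}"

# Hypothetical helper: create the fifo only if one is not already there.
ensure_fifo() {
    [ -p "$1" ] || mkfifo "$1"
}

# Hypothetical wrapper: make sure the fifo exists, then start mplayer
# in slave mode, listening on it. Arguments are passed through to mplayer.
transcribe_play() {
    ensure_fifo "$FIFO" && mplayer -slave -input "file=$FIFO" "$@"
}
```

Used as `transcribe_play audio-to-transcribe.mp3`, it does the same job as the alias and the ~/.profile line combined, and it is safe to run more than once per boot.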

irssi and libera.chat

I’m in some channels that are moving from Freenode to Libera.

My irssi runs on a DigitalOcean droplet, and whenever I try to connect to Libera from that instance, I get the error:

[libera] !tungsten.libera.chat *** Notice -- You need to identify via SASL to use this server

Libera’s irssi guide (https://libera.chat/guides/irssi) says how to connect with SASL, and down in their sasl docs (https://libera.chat/guides/sasl) they mention that SASL is required for IP ranges that are easy to run bots on... including my VPS.

The fix is to pop open an IRC client locally (or use web IRC), connect to Libera without SASL, and register one’s nick and password. After verifying one’s email address over the regular connection, the network can be reached via SASL from anywhere using the registered nick as the username and the nickserv password as the password.

Obvious in retrospect, but poorly SEO’d for how the problem looks at the outset, so that’s how I worked around problems reaching Libera from Irssi on a VPS.

Assembly Lines

This time last year, my living room was occupied by a cotton mask production facility of my own devising. I had reverse engineered a leftover surgical mask to get the approximate dimensions, consulted pictures of actual surgeons’ masks, and contrived a mask design which was easy-enough to sew in bulk, durable-enough to wash with one’s linens, and wearable-enough to fit most faces.

Tinkering with and improving the production line was delightful enough to make me wonder if I’d missed a deeper calling when I chose not to pursue industrial engineering as a career, but the actual work – the parts where I used myself as just another machine to make more masks happen – was profoundly miserable. At the time, it made more sense to attribute that misery to current events: The world as we knew it is ending, of course I’m grumpy. I took it for granted that the sewing project of making masks was equivalent to the design/prototype/build cycle of my more creative sewing endeavors, and assumed that it was supposed to be equally enjoyable.

A year later, however, I’m running a similar personal assembly line on an electrical project, and noticing some patterns. I have to do 4 steps each on 96 little widgets to complete this phase of the project. My engineering intuition says that the optimal process would be to do all of step 1, then all of step 2, then all of step 3, then all of step 4. That seems like it should be the fastest, and make me happy because it’s the best – no wasted effort taking out then putting away the set of tools for each step several times.

The large-batch process would also yield consistency across all of its outputs, so that no one widget comes out much worse than any other. Consistency is aesthetic and satisfying in the end result, so the process which yields consistency should feel preferable... but instead, it feels deeply distasteful to stick with any one production phase for too long. What’s going on there? What assumption is one side of the internal argument using that the other side lacks?

It took me 2 steps over about 24 of the widgets to figure out what felt so wrong about that assembly-line reasoning: The claims of “best” and “fastest” only hold if the process being done remains exactly the same on widget 96 as it was on widget 1. That’s true if a machine is doing it, but false if the worker is able and allowed to think about the process they’re working on. Larger batch sizes are optimal if the assembly process is unchanging, but detrimental if the process needs to be modified for efficiency or ease of use along the way. For instance, I’d initially planned a design that needed about 36’ of wire, but by examining and contemplating the project when it was ready to be wired up, I found a way to accomplish the same goals with only about 23’ of wire. If I’d been “perfectly efficient” in treating the initial design as perfect, I would likely have cut the wire into the lengths that were needed for the 36’ plan before the 23’ design occurred to me, and that premature optimization would have destroyed the materials I’d need to assemble the more efficient design once I figured it out.

In other words, a self-modifying assembly line necessarily shrinks the batch size that it’s worth producing. I’ve seen the same thing in software – when automating a process, it’s best to do it by hand a couple times, and then test a script on a small batch of input and fix any errors, and then apply it to larger and larger batches as it gets closer and closer to the best I can get it. It’s just easier to notice the phenomenon in a process that uses the hands while leaving the brain mostly free than in processes of more intellectual labor.
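The software version of "small batches first" can be sketched in a few lines of shell. This is only an illustration under assumptions: `process_one` is a stand-in for whatever script is being automated, and `run_batch` is a made-up helper that applies it to the first N inputs.

```shell
# Hypothetical stand-in for the real per-item script being developed.
process_one() {
    printf 'processed %s\n' "$1"
}

# Hypothetical helper: run process_one on at most the first $1 inputs.
# Stops at the first failure so the script can be fixed before going on.
run_batch() {
    size="$1"
    shift
    count=0
    for item in "$@"; do
        [ "$count" -ge "$size" ] && break
        process_one "$item" || return 1
        count=$((count + 1))
    done
}
```

The idea is to call `run_batch 2 ...` on the full input list, inspect the results and fix the script, then repeat with 8, then with everything. This simple version starts from the first input each time, so it only suits steps that are safe to repeat.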

And there was the answer as to why attempting to do all 96 of step 1, then all 96 of step 2, felt terrible: Because using the maximum batch size implied that the process was as good as I’d be able to get it, and that any improvements I might think of while working would be wasted if they weren’t backwards-compatible with the steps of the old process that were already completed. Smaller batch sizes, then, have an element of hope to them: There will be a “next time” of the whole process, so thinking about “how I’d do it next time” has a chance to pay off.

pulseaudio & volumio

The speakers in my living room are hooked up to a raspberry pi that runs Volumio. It’s a nice way to play music from various sources without having to physically reconfigure the speakers between inputs.

Volumio as a pulseaudio output

For a while, my laptop was able to treat Volumio as just another output device, based on the following setup:

- the package pulseaudio-module-zeroconf was installed on the pi and on every laptop that wants to output audio through the living room speakers

- the lines load-module module-zeroconf-publish and load-module module-native-protocol-tcp were added to /etc/pulse/default.pa on the pi

- the line load-module module-zeroconf-discover was added to /etc/pulse/default.pa on my Ubuntu laptop

- pulseaudio was restarted on both devices after these changes (pulseaudio -k to kill, pulseaudio to start it)

starting pulseaudio on boot on Volumio

And then as long as the laptop was connected to the same wifi network as the pi, it Just Worked. Until, in the course of troubleshooting an issue that turned out to involve the laptop having chosen the wrong wifi, I power cycled the pi and it stopped working, because pulseaudio was not yet configured to start on boot.

The solution was to add the following to /etc/systemd/system/pulseaudio.service on the pi:

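[Unit]

Description=PulseAudio system server

[Service]

Type=notify

# systemd ignores unknown keys such as Exec=, so this line from the older config does nothing:

# Exec=pulseaudio --daemonize=no --system --realtime --log-target=journal

User=volumio

# ExecStart= is the directive that actually launches the service.

ExecStart=/usr/bin/pulseaudio

[Install]

# Without a WantedBy= line, "systemctl enable" has nothing to hook the service into at boot.

WantedBy=multi-user.target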

And then enabling, starting, and troubleshooting any failures to start:

systemctl --system enable pulseaudio.service

systemctl --system start pulseaudio.service

systemctl status pulseaudio.service -l # explain what went wrong

systemctl daemon-reload # run after editing the .service file

Thanks to Rudd-O’s blog post, which got me 90% of the way to the “start pulseaudio on boot” solution. Apparently systemctl started caring more about having an ExecStart directive since that post was written, which meant I had to inspect the resulting errors, which means I’m writing down the resulting tidbit of knowledge so that I can find it again later.

future work

Nobody in my household has yet found a good way to persuade the Windows computer who lives under the TV to speak pulseaudio. If I ever figure that out, I’ll update here.

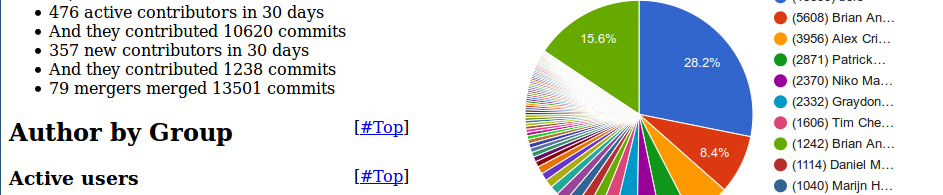

Moving on from Mozilla

Today – Friday, May 22nd, 2020 – is within days of my 5-year anniversary with Mozilla, and it’s also my last day there for a while. Working at Mozilla has been an amazing experience, and I’d recommend it to anyone.

There are some things that Mozilla does extremely well, and I’m excited to spread those patterns to other parts of the industry. And there are areas where Mozilla has room for improvement, where I’d like to see how others address those challenges and maybe even bring back what I learn to Moz someday.

Why go?

When I try to predict what my 2025 or 2030 self will wish I’d done with my career around now, I anticipate that I’ll want access to opportunities which build on a background of technical leadership and mentoring junior engineers.

It wouldn’t be impossible to create these opportunities within Mozilla, but from talking with trusted mentors both inside and outside the company, I’ve concluded that I would get a lot more impact for the same effort if I was working within a growing organization.

As a mature organization, Mozilla’s internal leadership needs are very different from those of a younger and more actively growing company. There’s a far higher bar at Moz for what it takes to be the best person for a task, because the saturation of “best people” is quite high and the prevalence of entirely new tasks is relatively low in comparison. Technical leadership here seems to often require creating a need as well as filling it. At a growing organization, on the other hand, the types and availabilities of such opportunities are very different.

I’m especially looking forward to leveling up on a different stack in my next role, to improve my understanding of the nuances of the underlying problems our technologies address. I think it’s a bit like learning a second language: only through comparing and contrasting multiple solutions to the same sort of problem can one understand what traits correlate to all those solutions’ strengths versus which details are simply incidental.

Why now?

I’ll be the first to admit that May 2020 is a really strange time to be changing jobs. But I have an annual tradition of interviewing at several places, learning what their stacks and cultures and unique fractals of tech debt look like, and then turning down an offer or two because changing roles would be a step backwards for both my career development and overall quality of life.

Shortly before the global conference circuit ground to a halt along with everything else, I started interviewing for a DevOps Advocate position, just to explore what it might look like to turn my teaching hobby into a day job. By the time those interviews were complete, the tech evangelism space had been turned inside out and was rapidly reinventing itself, and the skills that qualified me for the old world of devrel were looking less and less like the kind of expertise that might be needed to succeed in the new one. However, an SRE from the technical interviews suggested that I interview for her team, and upon taking that advice I discovered an organization that keeps most of the stuff I loved about Mozilla while also offering the other opportunities that I was looking for.

As with anywhere, there are a few aspects of my new role that I suspect may not be as great yet as where I’m leaving, but these areas of improvement look like things that I’ll be able to have some influence over. Or at least there’ll be room to push the Overton Window in a good direction!

Want more details on the new role? I’ll be writing more about it after I start on June 1st!

Offboarding

Turns out that 5 years at a place gets you a bit of a pile of digital detritus. Future me might want notes on what-all steps I took to remove myself from everything, so here goes:

- GitHub: Clicking the “pull requests” thing in that bar at the top gives a list of all open PRs created by me. I closed out everything work-related, by either finishing or wontfix-ing it. Additionally, I looked through the list of organizations in the sidebar of my account and kicked myself out of owner permissions that I no longer need. Since my GitHub workflow at Mozilla included a separate account for holding admin perms on some organizations, I revoked all of that account’s permissions and then deleted it.

- Google Drive: (because moving documents around through the Google Docs interface is either prohibitively difficult or just impossible) I moved all notes docs that anyone might ever want again into a shared team folder.

- Bugzilla: The “my dashboard” link at the top, when logged in, lists all needinfos and open assigned bugs. I went through all of these and removed the needinfos from closed bugs, changed the needinfos to appropriate people on open bugs, and reassigned assigned bugs to the people who are taking over my old projects. When reassigning, I linked the appropriate notes documents in the bugs and filled in any contextual information that they didn’t capture. I also checked that my Bugzilla admin had removed all settings to auto-assign me bugs in certain components.

- Email deletion prep: I searched for my old work email address in my password manager to find all accounts that were using it. I deleted these accounts or switched them to a personal address, as necessary. It turned out that the only thing I needed to switch over was my Firefox account, which I initially set up to test a feature on a service I supported, but then found very useful.

- Git repos: When purging pull requests and bugs, I pushed my latest work from actively developed branches, so that no work will be lost when I wipe my laptop.

- Assorted other perms: Some developers had granted me access to a repo of secrets, so I contacted them to get that access revoked.

- Sharing contact info: I didn’t send an email to the all-company list, but I did email my contact info to my teammates and other colleagues with whom I’d like to keep in touch.

- Take notes on points of contact. While I still have access to internal wikis, I note the email addresses of anyone I may need to contact if there are problems with my offboarding after my LDAP is decommissioned.

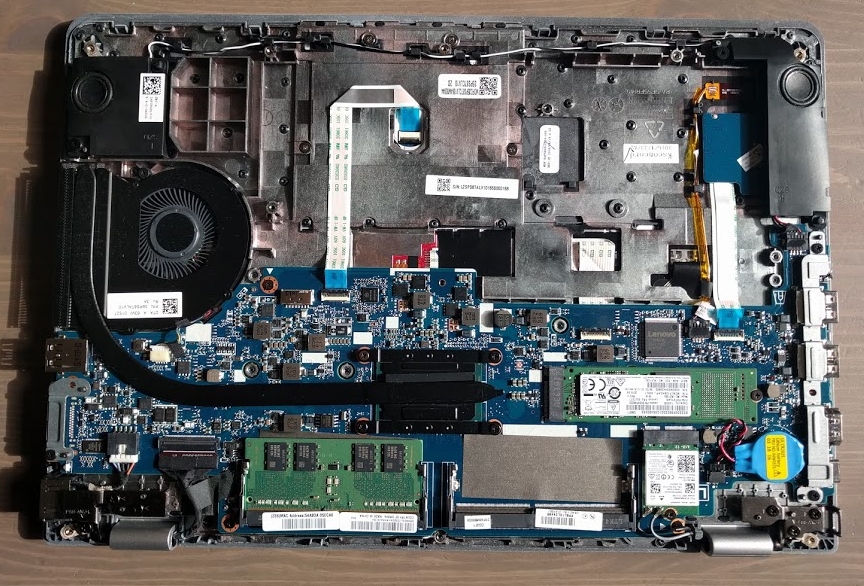

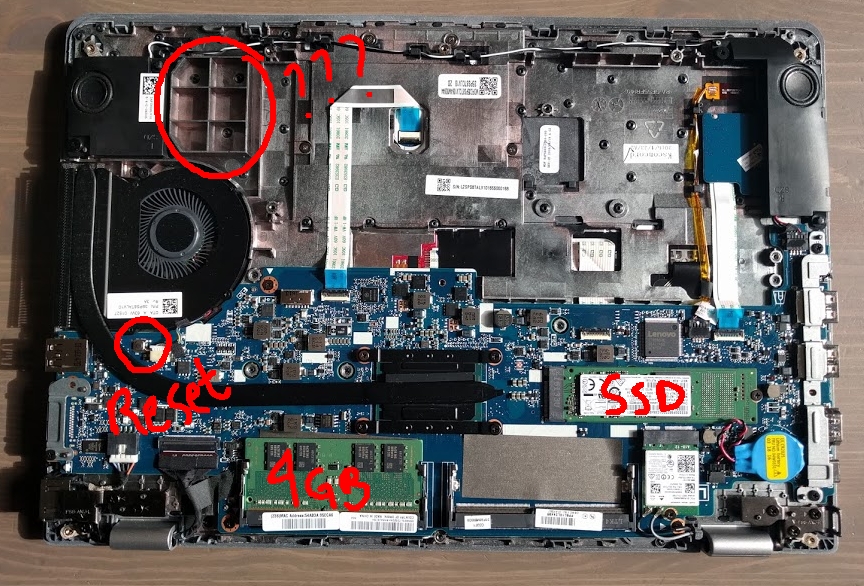

- Wipe the laptop: That’s next. All the repos of Secret Secrets are encrypted on its disk and I’ll lose the ability to access an essential share of the decryption key when my LDAP account goes away, but it’s still best practice to wipe hardware before returning it. So I’ll power it off, boot it from a liveUSB, and then run a few different tools to wipe and overwrite the disk.

Git: moving a module into a monorepo

My team has a repo where we keep all our terraform modules, but we had a separate module off in its own repo for reasons that are no longer relevant.

Let’s call the modules repo git@github.com:our-org/our-modules.git. The module moving into it, let’s call it git@github.com:our-org/postgres-module.git, because it’s a postgres module.

First, clone both repos:

git clone git@github.com:our-org/our-modules.git

git clone git@github.com:our-org/postgres-module.git

I can’t just add postgres-module as a remote to our-modules and pull from it, because I need the files to end up in a subdirectory of our-modules. Instead, I have to make a commit to postgres-module that puts its files in exactly the place that I want them to land in our-modules. If I didn’t, the README.md files from both repos would hit a merge conflict.

So, here’s how to make that one last commit:

cd postgres-module

mkdir postgres

git mv *.tf postgres/

git mv *.md postgres/

git commit -m "postgres: prepare for move to modules repo"

cd ..

Notice that I don’t push that commit anywhere. It just sits on my filesystem, because I’ll pull from that part of my filesystem instead of across the network to get the repo’s changes into the modules repo:

cd our-modules

git remote add pg ../postgres-module/

git pull pg master --allow-unrelated-histories

git remote rm pg

cd ..

At this point, I have all the files and their history from the postgres module in the postgres directory of the our-modules repo. I can then follow the usual process to PR these changes to the our-modules remote:

cd our-modules

git checkout -b import-pg-module

git push origin import-pg-module

firefox https://github.com/our-org/our-modules/pull/new/import-pg-module

We eventually ended up skipping the history import for this module, but figuring out how to do it properly was still an educational exercise.

Finding a lost Minecraft base

I happen to administer a tiny, mostly-vanilla Minecraft server. The other day, I was playing there with some friends at a location out in the middle of nowhere. I slept in a bed at the base, thinking that would suffice to get me back again later.

After returning to spawn, installing a warp plugin (and learning that /warp comes from Essentials), rebooting the server, and teleporting to some other coordinates to install their warps, I tried killing my avatar to return it to its bed. Instead of waking up in bed, it reappeared at spawn. Since my friends had long ago signed off for the night, I couldn’t just teleport to them. And I hadn’t written down the base’s coordinates. How could I get back?

Some digging in the docs revealed that there does not appear to be any console command to get a server to disclose the last seen location, or even the bed location, of an arbitrary player to an administrator. However, the server must know something about the players, because it will usually remember where their beds were when they rejoin the game.

On the server, there is a world/playerdata/ directory, containing one file per player that the server has ever seen. The file names are the player UUIDs, which can be pasted into this tool to turn them into usernames. But I skipped the tool, because the last modified timestamps on the files told me which two belonged to the friends who had both been at our base. So, I copied a .dat file that appeared to correspond to a player whose location or bed location would be useful to me. Running file on the file pointed out that it was gzipped, but unzipping it and checking the result for anything useful with strings yielded nothing comprehensible.
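The two checks described above (sorting the player files by modification time, and confirming that a file is gzipped) can also be sketched in Python. This is only an illustration: the helper names `newest_player_files` and `is_gzipped` are made up for this example, and it assumes the player files end in `.dat`.

```python
from pathlib import Path


def newest_player_files(playerdata_dir, count=2):
    """Return the `count` most recently modified .dat files, newest first."""
    dat_files = Path(playerdata_dir).glob("*.dat")
    return sorted(dat_files, key=lambda p: p.stat().st_mtime, reverse=True)[:count]


def is_gzipped(path):
    """Return True if the file starts with the gzip magic bytes 0x1f 0x8b."""
    with open(path, "rb") as f:
        return f.read(2) == b"\x1f\x8b"
```

Calling `newest_player_files("world/playerdata")` from the server directory would list the candidates, and `is_gzipped` on each one reports roughly what `file` told me.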

The wiki reminded me that the .dat was NBT-encoded. The recommended NBT Explorer tool appeared to require a bunch of Mono runtime stuff to be compatible with Linux, so instead I grabbed some code that claimed to be a Python NBT wrapper to see if it would do anything useful. With some help from its examples, I retrieved the player’s bed location:

from nbt import *

n = nbt.NBTFile("myfile.dat",'rb')

print("x=%s, y=%s, z=%s" % (n["SpawnX"], n["SpawnY"], n["SpawnZ"]))

Teleporting to those coordinates revealed that this was indeed the player’s bed, at the base I’d been looking all over for!

The morals of this story are twofold: First, I should not quit writing down coordinates I care about on paper, and second, Minecraft-adjacent programming is still not my idea of a good time.

Toy hypercube construction

I think hypercubes are neat, so I tried to make one out of string to play with. In the process, I discovered that there are surprisingly many ways to fail to trace every edge of a drawing of a hypercube exactly once with a single continuous line.

kubectl unable to recognize STDIN

Or, Stupid Error Of The Day. I’m talking to GCP’s Kubernetes Engine through several layers of intermediate tooling, and kubectl is failing:

subprocess.CalledProcessError: Command '['kubectl', 'apply', '--record', '-f', '-']' returned non-zero exit status 1.

Above that, in the wall of other debug info, is an error of the form:

error: unable to recognize "STDIN": Get https://11.22.33.44/api?timeout=32s: dial tcp 11.22.33.44:443: i/o timeout

This error turned out to have such a retrospectively obvious fix that nobody else seems to have published it.

When setting up the cluster on which kubectl was failing, I added the IP from which my tooling would access it, and hit the “done” button to save my changes. (That’s under the Authorized Networks section in “kubernetes engine -> clusters -> edit cluster” if you’re looking for it in the GCP console.) However, the “done” button is only one of the two required steps to save changes: One also must scroll all the way to the bottom of the page and press the “save” button there.

So if you’re here because you Googled that error, go recheck that you really do have access to the cluster on which you’re trying to kubectl apply. Good luck!
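One quick way to recheck access from the machine running the tooling is to try opening a TCP connection to the cluster’s API endpoint. The sketch below is a rough diagnostic rather than anything kubectl or GCP provides; `can_reach` is a hypothetical helper name, and the port and timeout are assumptions.

```python
import socket


def can_reach(host, port=443, timeout=5.0):
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers timeouts, refused connections, and unreachable hosts.
        return False
```

With the placeholder address from the error above, that would be `can_reach("11.22.33.44")`. A `False` result is consistent with the source IP missing from Authorized Networks, though it can’t distinguish that from other network problems.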

What to bring to CCC Camp next time

I took last week off work and attended CCC camp, which was wonderful on a variety of axes. I packed light, but through the week I noted some things it’d be worth packing less-lightly for.

So, here are my notes on what it’d be worth bringing if or when I attend it again:

Clothing

The site is dusty, extremely hot through the day, and quite cold at night. Fashion ranges from “generic nerd” to hippie, rave, and un-labelably eccentric. There is probably no wrong thing to wear, though I didn’t see a single suit or tie. A full base layer and a silk sleeping bag liner improve comfort at night. A big hat, or even an umbrella, offers protection from the day star.

I was glad to have 3 pairs of shoes: Lightweight waterproof sandals for showering, sturdier sandals for walking around in all day, and boots for early mornings and late nights. I saw quite a few long coats and even cloaks at night, and their inhabitants all looked very comfortably warm.

Doing sink laundry was more inconvenient at camp than for ordinary travel, and I was glad to have packed to minimize it.

A small comfortable bag, or large pockets in every outfit, are essential for keeping track of one’s wallet, phone, map, and water bottle.

I occasionally found myself wishing that I’d brought a washable dust mask, usually around midafternoon when camp became one big dust cloud.

Campsite Amenities

Covering a tent in space blankets makes it look like a baked potato, but keeps it warm and dark at night and cool through early afternoon. Space blankets are super cheap online, but difficult to find locally.

For a particularly opulent tent experience, consider putting a doormat outside the entrance as a place to remove shoes or clean dusty feet before going inside. I improvised a doormat with a trash bag, which was alright but the real thing would have been nicer to sit on.

Biertisch tables and benches are prevalent around camp, so you can usually find somewhere to sit, but it doesn’t hurt to bring a camp chair of the folding or inflatable variety. Inflatable stuff, from furniture to swimming pools, tended to survive fine on the ground.

I was glad to have brought a full sized towel rather than a tiny travel one. A shower caddy or bag to carry soap, washcloth, hair stuff, and clean clothes would have been handy, though I improvised one from another bag that I had available.

String and duct tape came in predictably handy in customizing my campsite.

Electronics

DECT phones are very fun at camp, but easy to pocket dial with. This is solved by finding the lock feature on the keypad, or picking a flip phone. I was shy about publishing my number and location in the phonebook, but after seeing how helpful the directory was for people to get ahold of new acquaintances for important reasons, I would be more public about my temporary number in the future.

Electricity is a limited resource but sunlight isn’t. Many tents sport portable solar panels. For those whose electronics have non-European plugs, a power strip from home is a good idea.

I packed a small headlamp and used it pretty much every day. Even with it, I found myself occasionally wishing that I’d brought a small LED lantern as well.

A battery to recharge cell phones is good to have as well, especially if you don’t run power to your tent. A battery can be left charging unattended in all kinds of public places where one would never leave one’s phone or laptop.

Food

Potable water is free, both still and sparkling. Perhaps I’ve been spoiled by the quality of the tap water at my home, but I wished that I’d brought water flavorings to mask the local combination of minerals.

I brought a small medical kit, from which I ended up using or sharing some aspirin, ibuprofen, antihistamines, and lots of oral rehydration salt packets.

Meals were available for free (with donations gratefully accepted) at several camps for everyone, and at the Heaven kitchen for volunteers. There were also a variety of food carts with varyingly priced dishes. The food carts outside the gates in front of the venue were good for an icecream or fresh veggie snack, which were harder to find within camp.

Savory meals and all kinds of drinks were everywhere, but there didn’t seem to be any place nearby to just pick up straight chocolate. Small, nonperishable snacks like that are worth getting at a grocery before arrival, since they’re not readily available on the grounds.

Other

If any of the special skills that your nerd friends ask you for help with require tools, bring them. I happen to always carry a needle and thread when traveling, and ended up using them to repair a giant inflatable computer-controlled sculpture.

A hammock, and something to shade it with, came in very handy and would be worth bringing again. There were lots of trees, and it might have been entertaining to set up a slackline for passers-by to fall off of, but I don’t think it’d be worth the weight of carrying one internationally.

Night time is basically a futuristic art show as well as a party. There’s no such thing as too much electroluminescent wire or too many LEDs, whether for decorating your camp or yourself. As a music party, it’s also extremely loud, so I was glad to have brought earplugs. Comfortable earplugs also improve sleep; music goes till 3 or 4 AM in many places and early risers start making noise around 8 or 9.

Camp has a lake, in which it’s popular to float large inflatable animals, especially unicorns. I saw more big inflatable unicorn floaties being used around camps as extra seating than being used in the lake, though.

There’s a railway that goes around camp, and sometimes runs a steam train. I won’t say you should rig a little electric cart to fit its rails and drive around on it, but somebody did and looked like they were having a really wonderful time.

Bikes, and lots of folding bikes, were everywhere. Scooters, skateboards, and all sorts of other wheeled contrivances, often electric, were also prevalent. The only rolling transportation that I didn’t see at all around camp were roller blades and skates, because the ground is probably too rough for them.

I ran out of stickers, and wished I’d brought more. I didn’t see as many pins as some conferences have.

A small notebook also came in handy. Each day, I checked both the stage schedule and the calendar to find the official and unofficial events which looked interesting, and noted their times on paper. It was consistently convenient to have a means of jotting down notes which didn’t risk running out of battery. Flipping through the book afterwards, about 1/4 of its contents is actually pictures I drew to explain various concepts to people I was chatting with, a few pages are daily schedule notes, and the rest is about half notes on things that presenters said and half ideas I jotted down to do something with later.

I was glad to have brought cash rather than just cards, not only for food but also because many workshops had a small fee to cover the cost of the materials that they provided.

Camp Advice

Nobody even tries to maintain a normal sleep schedule. People sleep when they’re tired, and do stuff when they aren’t. Talks and events tend to be scheduled from around noon to around midnight. I don’t think it would be possible to attend camp with a rigorous plan for what to do every day and both stick to that plan and get the most out of the experience.

In shared spaces, people pick the lowest common denominator of language – at several workshops, even those initially scheduled to be held in German, presenters proactively asked if any attendees needed it to be in English then switched to English if asked. Behind-the-scenes, such as in the volunteers’ kitchen, I found that this was reversed: Everyone speaks German, and only switches to give you instructions if you specifically ask for English. Plenty of attendees have no German at all and get along fine.

Volunteer! If something isn’t happening how it should, fix it, or ask “how can I help?”. Volunteering an hour or two for filing badges or washing dishes is a great way to make new friends and see another side of how camp works.

More on Mentorship

Last year, I wrote about some of the aspirations which motivated my move from Mozilla Research to the CloudOps team. At the recent Mozilla All Hands in Whistler, I had the “how’s the new team going?” conversation with many old and new friends, and that repetition helped me reify some ideas about what I really meant by “I’d like better mentorship”.

Rustacean Hat Pattern

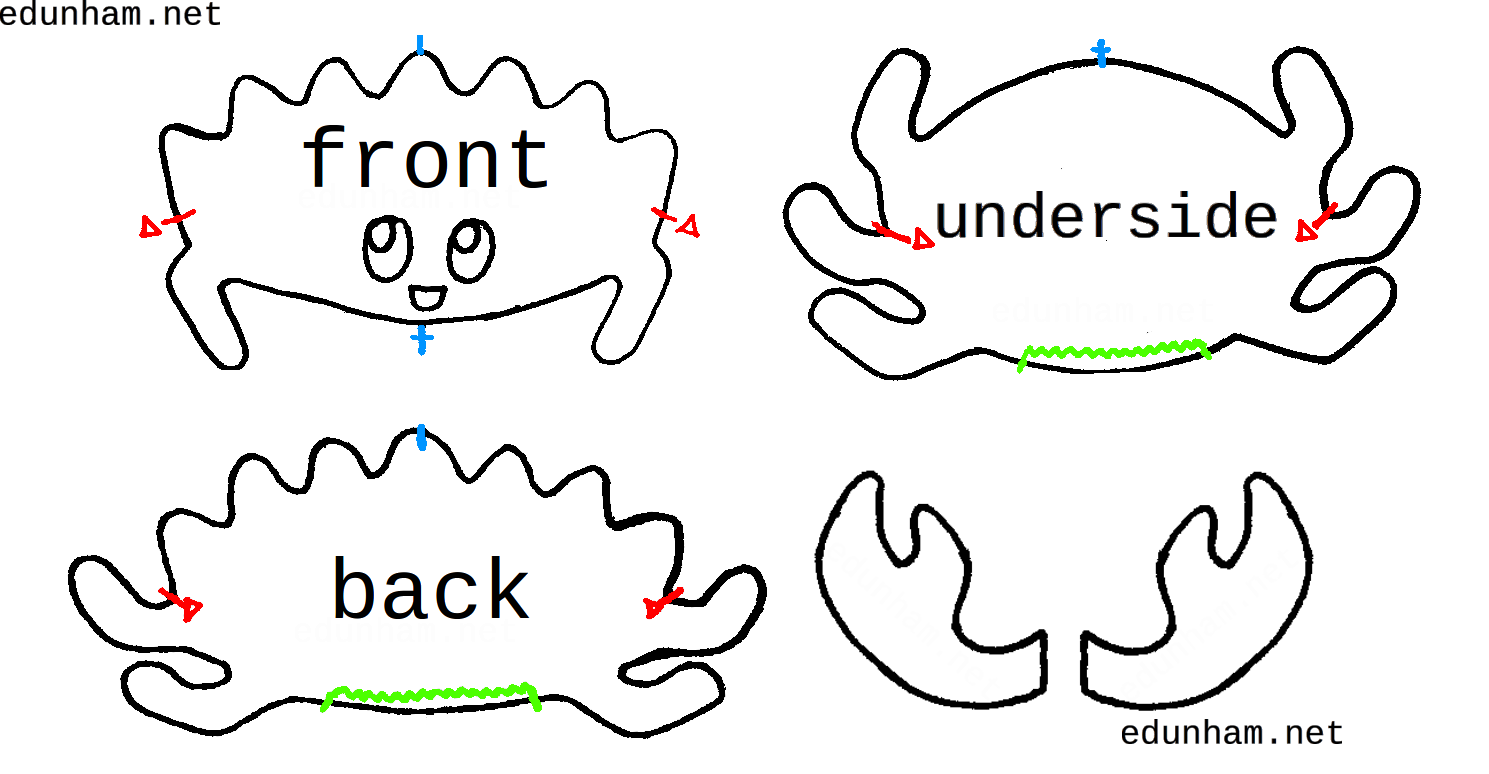

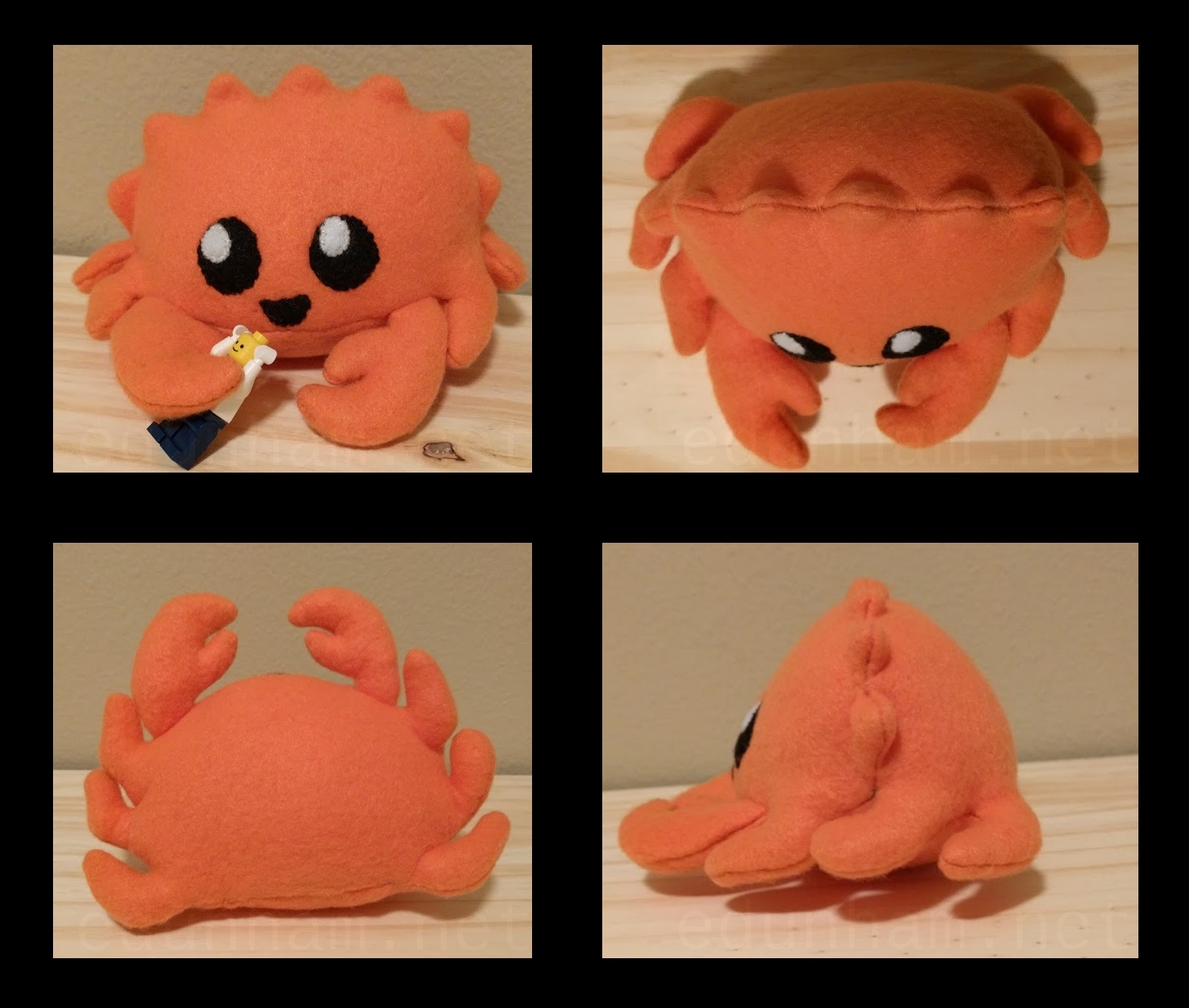

Based on feedback from the crab plushie pattern, I took more pictures this time.

There are 40 pictures of the process below the fold.

When searching an error fails

This blog has seen a dearth of posts lately, in part because my standard post formula is “a public thing had a poorly documented problem whose solution seems worth exposing to search engines”. In my present role, the tools I troubleshoot are more often private or so local that the best place to put such docs has been an internal wiki or their own READMEs.

This change of ecosystem has caused me to spend more time addressing a different kind of error: Those which one really can’t just Google.

Running a Python3 script in the right place every time

I just wrote a thing in a private repo that I suspect I’ll want to use again later, so I’ll drop it here.

The situation is that there’s a repo, and I’m writing a script which shall live in the repo and assist users with copying a project skeleton into its own directory.

The script, newproject, lives in the bin directory within the repo.

The script needs to do things from the root of the repository for the paths of its file copying and renaming operations to be correct.

If it was invoked from somewhere other than the root of the repo, it must thus change directory to the root of the repo before doing any other operations.

The snippet that I’ve tested to meet these constraints is:

# chdir to the root of the repo if needed

if __file__.endswith("/bin/newproject"):

    # slice off the suffix; str.strip() would remove a set of characters, not a suffix

    os.chdir(__file__[: -len("/bin/newproject")])

if __file__ == "newproject":

    os.chdir("..")

In code review, it was pointed out that this simplifies to a one-liner:

os.chdir(os.path.join(os.path.dirname(__file__), '..'))

This will keep working right up until some malicious or misled individual moves the script to an entirely different location within the repository or filesystem and tries to run it from there.
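For future reference, here is a pathlib flavored sketch of the same idea. It is not what landed in the repo; `repo_root` is a name invented for this example, and it still assumes the script sits exactly one directory below the repository root.

```python
from pathlib import Path


def repo_root(script_path):
    """Return the parent of the directory that contains script_path."""
    # resolve() makes the path absolute and follows symlinks, so the result
    # does not depend on how the script was invoked.
    return Path(script_path).resolve().parent.parent
```

Inside the script, this would be used as `os.chdir(repo_root(__file__))`. Resolving to an absolute path first means the answer is the same whether the script is run as `newproject`, `./newproject`, or `bin/newproject`.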

CFP tricks 1

Or, “how to make a selection committee do one of the hard parts of your job as a speaker for you”. For values of “hard parts” that include fine-tuning your talk for your audience.

Skill Tree Balancing with a Job Move

I’ve recently identified some ways in which my former role wasn’t setting me up for career success, and taken steps to remedy them. Since not everybody lucks into this kind of process like I did, I’d like to write a bit about what I’ve learned in case it offers some reader a useful new framework for thinking about their skills and career growth.

Tl;dr: I’m moving from Research to Cloud Ops within Mozilla. The following wall of text and silly picture are a brain dump of new ideas about skills and career growth that I’ve built through the process.

Why an ops career

Disclaimers: Not all tasks that come to a person in an ops role meet my definition of ops tasks. Advanced ops teams move on from simple problems and choose more complex problems to solve, for a variety of reasons. This post contains generalizations, and all generalizations have counter-examples. This post also refers to feelings, and humans often experience different feelings in response to similar stimuli, so yours might not be like mine.

It’s been a great “family reunion” of FOSS colleagues and peers in the OSCON hallway track this week. I had a conversation recently in which I was asked “Why did you choose ops as a career path?”, and this caused me to notice that I’ve never blogged about this rationale before.

Thoughts on retiring from a team

The Rust Community Team has recently been having a conversation about what a team member’s “retirement” can or should look like. I used to be quite active on the team but now find myself without the time to contribute much, so I’m helping pioneer the “retirement” process. I’ve been talking with our subteam lead extensively about how to best do this, in a way that sets the right expectations and keeps the team membership experience great for everyone.

Nota bene: This post talks about feelings and opinions. They are mine and not meant to represent anybody else’s.

Slacking from Irssi

UPDATE: SLACK DECIDED THIS SHOULD NO LONGER BE POSSIBLE AND IT WILL NOT WORK ANY MORE

My IRC client helps me work efficiently and minimize distraction. Over the years, I’ve configured it to behave exactly how I want: Notifying me when topics I care about are under discussion, and equally as important, refraining from notifications that I don’t want. Since my IRC client is developed under the GPL, I have confidence that the effort I put into customizing it to improve my workflow will never be thrown out by a proprietary tool’s business decisions.

But the point of chat is to talk to other humans, and a lot of humans these days are choosing to collaborate on Slack. Slack has its pros and cons, but some of the drawbacks can be worked around using open technologies.

Some northwest area tech conferences and their approximate dates

Somebody asked me recently about what conferences a developer in the pacific northwest looking to attend more FOSS events should consider. Here’s an incomplete list of conferences I’ve attended or hear good things about, plus the approximate times of year to expect their CFPs.

The Southern California Linux Expo (SCaLE) is a large, established Linux and FOSS conference in Pasadena, California. Look for its CFP at socallinuxexpo.org in September, and expect the conference to be scheduled in late February or early March each year.

If you don’t mind a short flight inland, OpenWest is a similar conference held in Utah each year. Look for its CFP in March at openwest.org, and expect the conference to happen around July. I especially enjoy the way that OpenWest brings the conference scene to a bunch of fantastic technologists who don’t always make it onto the national or international conference circuit.

Moving northward, there are a couple DevOps Days conferences in the area: Look for a PDX DevOps Days CFP around March and conference around September, and keep an eye out in case Boise DevOps Days returns.

If you’re into a balance of intersectional community and technical content, consider OSBridge (opensourcebridge.org) held in Portland around June, and OSFeels (osfeels.com) held around July in Seattle.

In Washington state, LinuxFest Northwest (CFP around December, conference around April, linuxfestnorthwest.org) in Bellingham, and SeaGL (seagl.org, CFP around June, conference around October) in Seattle are solid grass-roots FOSS conferences. For infosec in the area, consider toorcamp (toorcamp.toorcon.net, registration around March, conference around June) in the San Juan Islands.

And finally, if a full conference seems like overkill, consider attending a BarCamp event in your area. Portland has CAT BarCamp (catbarcamp.org) at Portland State University around October, and Corvallis has Beaver BarCamp (beaverbarcamp.org) each April.

This is by no means a complete list of conferences in the area, and I haven’t even tried to list the myriad specialized events that spring up around any technology. Meetup, and calagator.org for the Portland area, are also great places to find out about meetups and events.

User is not authorized to perform iam:ChangePassword.

Summary: A user who is otherwise authorized to change their password may get this error when attempting to change their password to a string which violates the Password Policy in your IAM Account Settings.

So, I was setting up the 3rd or 4th user in a small team’s AWS account, and I did the usual: Go to the console, make a user, auto-generate a password for them, tick “force them to change their password on next login”, chat them the password and an admonishment to change it ASAP.

It’s a compromise between convenience and security that works for us at the moment, since there’s all of about 10 minutes during which the throwaway credential could get intercepted by an attacker, and I’d have the instant feedback of “that didn’t work” if anyone but the intended recipient performed the password change.

So, the 8th or 10th user I’m setting up, same way as all the others, gets that error on the change password screen: “User is not authorized to perform iam:ChangePassword”. Oh no, did I do their permissions wrong? I try explicitly attaching Amazon’s IAMUserChangePassword policy to them, because that should fix their not being authorized, right? Wrong; they try again and they’re still “not authorized”.

OK, I have their temp password because I just gave it to them, so I’ll pop open private browsing and try logging in as them.

When I try putting in the same autogenerated password at the reset screen, I get “Password does not conform to the account password policy.”. This makes sense; there’s a “prevent password reuse” policy enabled under Account Settings within IAM.

OK, we won’t reuse the password. I’ll just set it to that most seekrit string, “hunter2”. Nope, the “User is not authorized to perform iam:ChangePassword” is back. That’s funny, but consistent with the rules just being checked in a slightly funny order.

Then, on a hunch, I try the autogenerated password with a 1 at the end as the new password. It changes just fine and allows me to log in! So, the user did have authorization to change their password all along... they were just getting an actively misleading error message about what was going wrong.

So, if you get this “User is not authorized to perform iam:ChangePassword” error but you should be authorized, take a closer look at the temporary password that was generated for you. Make sure that your new password matches or exceeds the old one for having lowercase letters, uppercase letters, numbers, special characters, and total length.
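That rule of thumb can be written down as a quick local check. To be clear, this is only a sketch of my heuristic and not AWS’s actual policy logic, which depends on whatever Password Policy the account has configured; the function names are invented for the example.

```python
import string


def character_profile(password):
    """Report which character classes appear in the password."""
    return {
        "lowercase": any(c in string.ascii_lowercase for c in password),
        "uppercase": any(c in string.ascii_uppercase for c in password),
        "digits": any(c in string.digits for c in password),
        "special": any(c in string.punctuation for c in password),
    }


def matches_or_exceeds(temp_password, new_password):
    """Heuristic: the new password differs from the temporary one, is at least
    as long, and uses every character class that the temporary one used."""
    if new_password == temp_password or len(new_password) < len(temp_password):
        return False
    old = character_profile(temp_password)
    new = character_profile(new_password)
    return all(new[kind] for kind in old if old[kind])
```

By this check, “hunter2” fails against any autogenerated password that is longer or contains uppercase or special characters, while the autogenerated password with a 1 appended passes.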

When poking at it some more, I discovered that one also gets the “User is not authorized to perform iam:ChangePassword” message when one puts an invalid value into the “current password” box on the change password screen. So, check for typos there as well.

This yak shave took about an hour to pin down the fact that it was the contents of the password string generating the permissions error, and I haven’t been able to find the error string in any of Amazon’s actual documentation, so hopefully I’ve said “User is not authorized to perform iam:ChangePassword” enough times in this post that it pops up in search results for anyone else frustrated by the same challenge.

Better remote teaming with distributed standups

Agile development’s artifact of the daily stand-up meeting is a great idea. In theory, the whole team should stand together (sitting or eating makes meetings take too long) for about 5 minutes every morning. Each person should comment on:

- What they did since yesterday

- What they plan on doing today

- Any blockers, things they’re waiting on to be able to get work done

- Anything else

And then, 5 minutes later, everybody gets back to work. But do they really?

Saying Ping

There’s an idiom on IRC, and to a lesser extent other more modern communication media, where people indicate interest in performing a real-time conversation with someone by saying “ping” to them. This effectively translates to “I would like to converse with you as soon as you are available”.

The traditional response to “ping” is to reply with “pong”. This means “I am presently available to converse with you”.

If the person who pinged is not available at the time that the ping’s recipient replies, what happens? Well, as soon as they see the pong, they re-ping (either by saying “ping” or sometimes “re-ping” if they are impersonating a sufficiently complex system to hold some state).

This attempt at communication, like “phone tag”, can continue indefinitely in its default state.

It is an inefficient use of both time and mental overhead, since each missed “ping” leaves the recipient with a vague curiosity or concern: “I wonder what the person who pinged wanted to talk to me about...”. Additionally, even if both parties manage to arrange synchronous communication at some point in the future, there’s the very real risk that the initiator may forget why they originally pinged at all.

There is an extremely simple solution to the inefficiency of waiting until both parties are online, which is to stick a little metadata about your question onto the ping. “Ping, could you look at issue # xyz?” “Ping, can we chat about your opinions on power efficiency sometime?”. And yet there appears to be a decent correlation between people I regard as knowing more than I do about IRC etiquette, and people who issue pings without attaching any context to them.

If you do this, and happen to read this, could you please explain why to me sometime?

Resumes: 1 page or more?

Some of my IRC friends are job hunting at the moment, so I’ve been proofreading resumes. These friends are several years into their professional careers at this point, and I’ve found it really interesting to see what they include and exclude to make the best use of their resumes’ space.

Opinion: Levels of Safety Online

The Mozilla All-Hands this week gave me the opportunity to explore an exhibit about the “Mozilla Worldview” that Mitchell Baker has been working on. The exhibit sparked some interesting and sometimes heated discussion (as a direct result of my decision to express unpopular-sounding opinions), and helped me refine my opinions on what it means for someone to be “safe” on the internet.

Spoiler: I think that there are many different levels of safety that someone can have online, and the most desirable ones are also the most difficult to attain.

Obligatory disclaimer: These are my opinions. You’re welcome to think I’m wrong. I’d be happy to accept pull requests to this post adding tools for attaining each level of safety, but if you’re convinced I’m wrong, the best place to say that would be your own blog. Feel free to drop me a link if you do write up something like that, as I’d enjoy reading it!

Salt: Successful ping but highstate says “minion did not return”

Today I was setting up some new OSX hosts on Macstadium for Servo’s build cluster. The hosts are managed with SaltStack.

After installing all the things, I ran a test ping and it looked fine:

user@saltmaster:~$ salt newbuilder test.ping

newbuilder:

True

However, running a highstate caused Salt to claim the minion was non-responsive:

user@saltmaster:~$ salt newbuilder state.highstate

newbuilder:

Minion did not return. [No response]

Googling this problem yielded a bunch of other “minion did not return” kind of issues, but nothing about what to do when the minion sometimes returns fine and other times does not.

The fix turned out to be simple: When a test ping succeeds but a longer-running state fails, it’s an issue with the master’s timeout setting. The timeout defaults to 5 seconds, so a sufficiently slow job will look to the master like the minion was unreachable.

As explained in the Salt docs, you can bump the timeout by adding the line timeout: 30 (or whatever number of seconds you choose) to the file /etc/salt/master on the salt master host.

Advice on storing encryption keys

I saw an excellent question get some excellent infosec advice on IRC recently. I’m quoting the discussion here because I expect that I’ll want to reference it when answering others’ questions in the future.

Tech Internship Hunting Ideas

A question from a computer science student crossed one of my IRC channels recently:

Them: what is the best way to fish for internships over the summer?

Glassdoor?

Me: It depends on what kind of internship you're looking for. What kind of

internship are you looking for?

Them: Computer Science, anything really.

This caused me to type out a lot of advice. I’ll restate and elaborate on it here, so that I can provide a more timely and direct summary if the question comes up again.

Philosophy of Job Hunting

My opinion on job hunting, especially for early-career technologists, is that it’s important to get multiple offers whenever possible. Only once one has a viable alternative can one be said to truly choose a role, rather than being forced into it by financial necessity.

In my personal experience, cultivating multiple offers was an important step in disentangling impostor syndrome from my career choices. Multiple data points about one’s skills being valued by others can help balance out an internal monologue about how much one has yet to learn.

If you disagree that cultivating simultaneous opportunities, then politely declining all but the best, is a viable internship hunting strategy, the rest of this post may not be relevant or interesting to you.

Identifying Your Options

To get an internship offer, you need to make a compelling application to a company which might hire you. I find that a useful first step is to come up with a list of such companies, so you can study their needs and determine what will make your application interest them.

Use your social network. Ask your peers about what internships they’ve had or applied for. Ask your mentors whether they or their friends and colleagues hire interns.

When you ask someone about their experience with a company, remember to ask for their opinion of it. To put that opinion into perspective, it’s useful to also ask about their personal preferences for what they enjoy or hate about a workplace. Knowing that someone who prefers to work with a lot of background noise enjoyed a company’s busy open-plan office can be extremely useful if you need silence to concentrate! Listening with interest to a person’s opinions also strengthens your social bond with them, which never hurts if it turns out they can help you get into a company that you feel might be a good fit.

Use LinkedIn, Hacker News, Glassdoor, and your city’s job boards. The broader a net you cast to start with, the better your chances of eventually finding somewhere that you enjoy. If your job hunt includes certain fields (web dev, DevOps, big data, whatever), investigate whether there’s a meetup for professionals in that field in your region. If you have the opportunity to give a short talk on a personal project at such a meetup, do it and make sure to mention that you’re looking for an internship.

Identify your own priorities

Now that you have a list of places which might conceivably want to hire you, it’s time to do some introspection. For each field that you’ve found a prospective company in, try to answer the question “What makes you excited about working here?”.

You do not have to know what you want to do with your life to know that, right now, you think DevOps or big data or frontend development is cool.

You do not have to personally commit to a single passion at the expense of all others – it’s perfectly fine to be interested in several different languages or frameworks, even if the tech media tries to pit them against each other.

However, for each application, it’s prudent to only emphasize your interests in that particular field. It’s a bit of a faux pas to show up to a helpdesk interview and focus the whole time on your passion for building robots, or vice versa. And acting equally interested in every other field will cause an employer to doubt that you’re the best fit for a specialized role. So in an interview, try not to stray too far from the value that you’re able to deliver to that company.

This is also a good time to identify any deal-breakers that would cause you to decline a prospective internship. Are you ok with relocating? Is there some tool or technology that would cause you to dread going to work every day?

I personally think that, when you’re early in your career, it’s worth applying even to a role whose offer you know you wouldn’t accept. If they decide to interview you, you’ll get practice experiencing a real interview without the pressure of “I’ll lose my chance at my dream job if I mess this up!”. Plus if they extend an offer to you, it can help you calibrate the financial value of your skills and negotiate with employers that you’d actually enjoy.

Craft an excellent resume

I talk about this elsewhere.

There are a couple extra notes if you’re applying for an internship:

1) Emphasize the parts of your experience that relate most closely to what each employer values. If you can, it’s great to use the same words for skills that were used in the job description.

2) The bar for what skills go on your resume is lower when you have less experience. Did you play with Docker for a weekend recently and use it to deploy a toy app? Make sure to include that experience.

Practice, Practice, Practice

If you’re uncomfortable with interviewing, do it until it becomes second nature. If your current boss supports your internship search, do some mock interviews with them. If you’re nervous about things going wrong, have a friend roleplay a really bad interview with you to help you practice coping strategies. If you’ll be in front of a panel of interviewers, try to get a panel of friends to gang up on you and ask tough questions!

To some readers this may be obvious, but to others it’s worth pointing out that you should also practice wearing the clothes that you’ll wear to an interview. If you wear a tie, learn to tie it well. If you wear shirts or pants that need to be ironed, learn to iron them competently. If you wear shoes that need to be shined, learn to shine them. And if your interview will include lunch, learn to eat with good table manners and avoid spilling food on yourself.

Yes, the day-to-day dress codes of many tech offices are solidly in the “sneakers, jeans, and t-shirt” category for employees of all levels and genders. But many interviewers, especially mid- to late-career folks, grew up in an age when dressing casually at an interview was a sign of incompetence or disrespect. Although some may make an effort to overcome those biases, the subconscious conditioning is often still there, and you can take advantage of it by wearing at least business casual.

Apply Everywhere

If you know someone at a company where you’re applying, try to get their feedback on how you can tailor your resume to be the best fit for the job you’re looking at! They might even be able to introduce you personally to your potential future boss.

I think it’s worth submitting a good resume to every company which you identify as being possibly interested in your skills, even the ones you don’t currently think you want to work for. Interview practice is worth more in potential future salary than the hours of your time it’ll take at this point in your career.

Follow Up

If you don’t hear back from a company for a couple weeks, a polite note is in order. Restate your enthusiasm for their company or field, express your understanding that there are a lot of candidates and everything is busy, and politely solicit any feedback that they may be able to offer about your application. A delayed reply does not always mean rejection.

If you’re rejected, follow up to thank HR for their time.

If you’re invited to interview, reply promptly and set a time and date. For a virtual or remote interview, only offer times when you’ll have access to a quiet room with a good network connection.

Interview Excellently

I don’t have any advice that you won’t find a hundred times over on the rest of the web. The key points are:

- Show up on time, looking respectable

- Let’s hope you didn’t lie on your resume

- Restate each question in your answer

- It’s ok not to know an answer – state what you would do if you encountered the problem at work. Would you Google a certain phrase? Ask a colleague? Read the manual?

- Always ask questions at the end. When in doubt, ask your interviewer what they enjoy about working for the company.

Keep Following Up

After your interview, write to whoever arranged it and thank the interviewers for their time. For bonus points, mention something that you talked about in the interview, or include the answer to a question that you didn’t know off the top of your head at the time.

Getting an Offer

Recruiters don’t usually like to disclose the details of offers in writing right away. They’ll often phone you to talk about it. You do not have to accept or decline during that first call – if you’re trying to stall for a bit more time for another company to get back to you, an excuse like “I’ll have to run that by my family to make sure those details will work” is often safe.

Remember, though, that no offer is really a job until both you and the employer have signed a contract.

Declining Offers

If you’ve applied to enough places with a sufficiently compelling resume, you’ll probably have multiple offers. If you’re lucky, they’ll all arrive around the same time.

If you wish to decline an offer from a company that you’re certain you don’t want to work for, you can practice your negotiation skills. Read up on salary negotiation, try to talk the company into making you a better offer, and observe what works and what doesn’t. It’s not super polite to invest a bunch of their time in negotiations and then turn them down anyway, which is why I suggest only doing this to a place that you’re not very fond of.

To decline an offer without burning any bridges, be sure to thank them again for their time and regretfully inform them that you’ll be pursuing other opportunities at this time. It never hurts to also do them a favor like recommending a friend who’s job hunting and might be a good fit.

Again, though, don’t decline an offer until you have your actual job’s contract in writing.

Rust’s Community Automation

Here’s the text version, with clickable links, of my Automacon lightning talk today.

Setting a Freenode channel’s taxonomy info

Some recent flooding in a Freenode channel sent me on a quest to discover whether the network’s services were capable of setting a custom message rate limit for each channel. As far as I can tell, they are not.

However, the problem caused me to re-read the ChanServ help section:

/msg chanserv help

- ***** ChanServ Help *****

- ...

- Other commands: ACCESS, AKICK, CLEAR, COUNT, DEOP, DEVOICE,

- DROP, GETKEY, HELP, INFO, QUIET, STATUS,

- SYNC, TAXONOMY, TEMPLATE, TOPIC, TOPICAPPEND,

- TOPICPREPEND, TOPICSWAP, UNQUIET, VOICE,

- WHY

- ***** End of Help *****

Taxonomy is a cool word. Let’s see what taxonomy means in the context of IRC:

/msg chanserv help taxonomy

- ***** ChanServ Help *****

- Help for TAXONOMY:

-

- The taxonomy command lists metadata information associated

- with registered channels.

-

- Examples:

- /msg ChanServ TAXONOMY #atheme

- ***** End of Help *****

Follow its example:

/msg chanserv taxonomy #atheme

- Taxonomy for #atheme:

- url : http://atheme.github.io/

- ОХЯЕБУ : лололол

- End of #atheme taxonomy.

That’s neat; we can elicit a URL and some field with a Cyrillic and apparently custom name. But how do we put metadata into a Freenode channel’s taxonomy section? Google has no useful hits (hence this blog post), but further digging into ChanServ’s manual does help:

/msg chanserv help set

- ***** ChanServ Help *****

- Help for SET:

-

- SET allows you to set various control flags

- for channels that change the way certain

- operations are performed on them.

-

- The following subcommands are available:

- EMAIL Sets the channel e-mail address.

- ...

- PROPERTY Manipulates channel metadata.

- ...

- URL Sets the channel URL.

- ...

- For more specific help use /msg ChanServ HELP SET command.

- ***** End of Help *****

Set arbitrary metadata with /msg chanserv set #channel property key value

The commands /msg chanserv set #channel email a@b.com and /msg chanserv set #channel property email a@b.com appear to function identically, with the former being a convenient wrapper around the latter.

So that’s how #atheme got their fancy Cyrillic taxonomy: Someone with the appropriate permissions issued the command /msg chanserv set #atheme property ОХЯЕБУ лололол.

Behaviors of channel properties

I’ve attempted to deduce the rules governing custom metadata items, because I couldn’t find them documented anywhere.

- Issuing a set property command with a property name but no value deletes the property, removing it from the taxonomy.

- A property is overwritten each time someone with the appropriate permissions issues a set property command with a matching property name (more on the matching in a moment). The property name and value are stored with the same capitalization as the command issued.

- The algorithm which decides whether to overwrite an existing property or create a new one is not case sensitive. So if you set ##test email test@example.com and then set ##test EMAIL foo, the final taxonomy will show no field called email and one field called EMAIL with the value foo.

- When displayed, taxonomy items are sorted first in alphabetical order (case insensitively), then by length. For instance, properties with the names a, AA, and aAa would appear in that order, because the initial alphabetization is case-insensitive.

- Attempting to place mIRC color codes in the property name results in the error “Parameters are too long. Aborting.” However, placing color codes in the value of a custom property works just fine.
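The first four rules above can be restated as a small Python model. This is only a sketch of the behavior I observed, not ChanServ's implementation: the Taxonomy class and its method names are hypothetical, and the color code rule is left out. Sorting by the lowercased name alone is an assumption that happens to reproduce the a, AA, aAa example, since a shorter name that is a prefix of a longer one already sorts first.

```python
class Taxonomy:
    """Toy model of the observed channel property rules (hypothetical)."""

    def __init__(self):
        # Lowercased name -> (name as typed, value), so that matching
        # ignores case while the stored capitalization is preserved.
        self._props = {}

    def set_property(self, name, value=None):
        """Mimic `set #channel property name [value]`."""
        key = name.lower()
        if not value:
            # A name with no value deletes the property.
            self._props.pop(key, None)
        else:
            # Case-insensitive match: overwrite, keeping the newest
            # capitalization of both name and value.
            self._props[key] = (name, value)

    def listing(self):
        """Return (name, value) pairs in the order the taxonomy shows them."""
        return sorted(self._props.values(), key=lambda item: item[0].lower())
```

For example, calling set_property("email", "test@example.com") and then set_property("EMAIL", "foo") on the same Taxonomy leaves a single entry named EMAIL with the value foo, matching the ##test experiment above.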

Other uses

As a final note, you can also do basically the same thing with Freenode’s NickServ, to set custom information about your nickname instead of about a channel.

Adventures in Mercurial